The robots.txt file plays an important role in SEO. In simple terms, robots.txt is a text file that tells search engine bots which parts of a website they may crawl. Its set of instructions determines which pages search engines can crawl, which in turn shapes what they can list in their search results. Writing rules that control what search engines crawl and what they skip is easy. In this post, I will talk about how to test your robots.txt file.

When site owners want to give instructions to web robots, they place a text file called robots.txt in the root of the website hierarchy. This text file contains the instructions in a specific format. Robots that choose to follow the instructions try to fetch this file and read the instructions before fetching any other file from the website. If this file doesn't exist, web robots assume that the website owner does not wish to place any limitations on crawling the entire site.
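To see how a compliant robot interprets these instructions, here is a minimal sketch using Python's standard-library `urllib.robotparser` module, which parses robots.txt text and answers whether a given user agent may fetch a URL. It is a reference parser, not Googlebot's own implementation, and the rules, the `example.com` URLs, the `BadBot` user agent, and the `build_parser` helper are all hypothetical.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for example.com, used only for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /private/

User-agent: BadBot
Disallow: /
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text the way a compliant crawler would before fetching pages."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(ROBOTS_TXT)

# Googlebot has no group of its own, so the "User-agent: *" group applies.
print(parser.can_fetch("Googlebot", "https://example.com/blog/post-1/"))          # True
print(parser.can_fetch("Googlebot", "https://example.com/wp-admin/options.php"))  # False

# BadBot matches its own group, which disallows the whole site.
print(parser.can_fetch("BadBot", "https://example.com/blog/post-1/"))             # False

# An empty file contains no rules, so nothing is restricted.
print(build_parser("").can_fetch("Googlebot", "https://example.com/anything"))    # True
```

The last check mirrors the point above: with no rules to follow, a robot is free to crawl the whole site.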

How to Validate Your robots.txt Using Google Search Console:

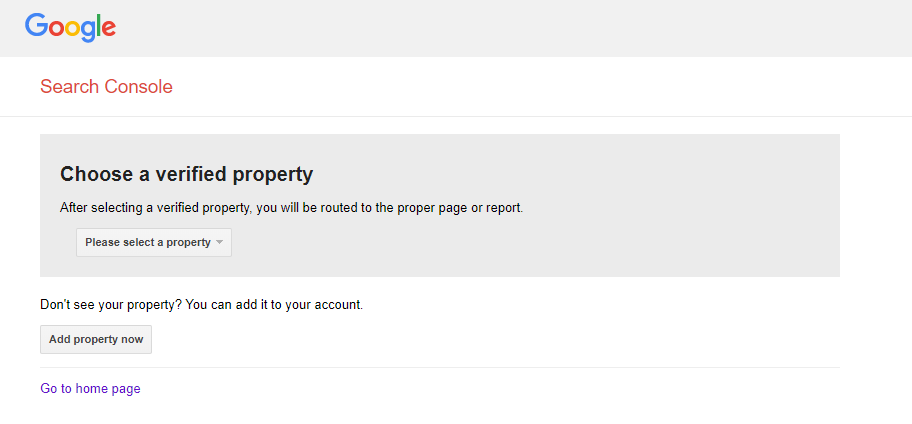

Step 1: Head over to the robots.txt Tester in Google Search Console and add your property.

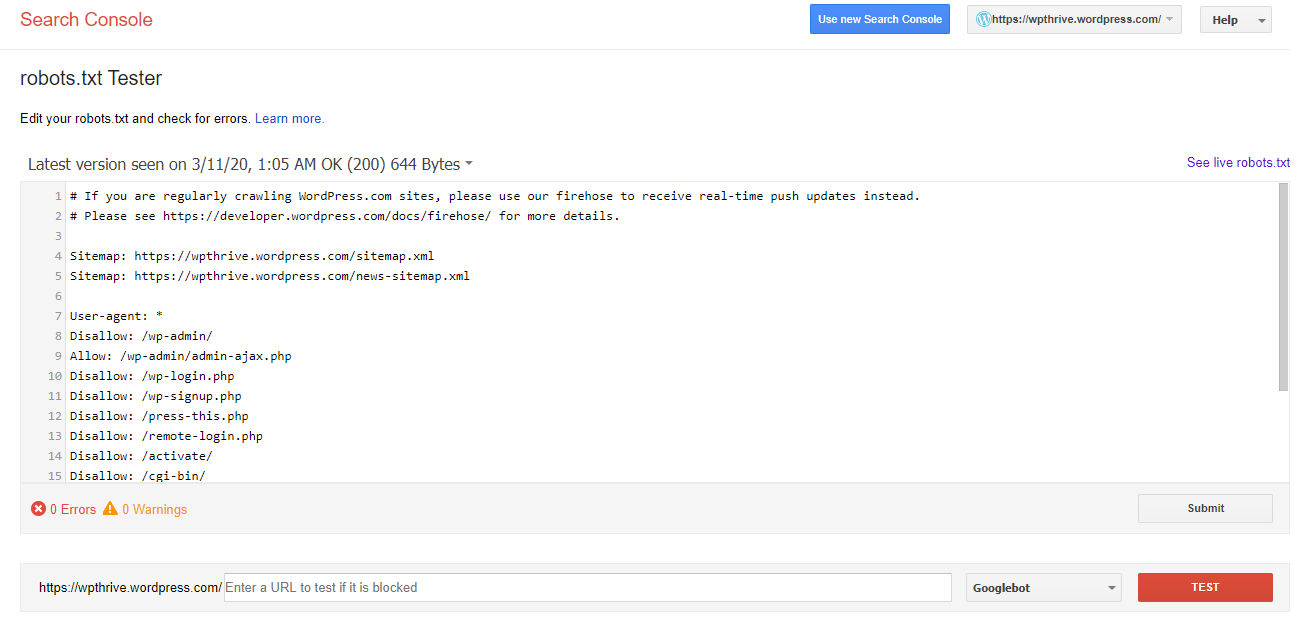

Step 2: After adding the property, you will get the option to test your robots.txt file.
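The tester checks whether a particular URL is blocked for a particular crawler. If you want to run the same kind of check locally, for example on a draft file, here is a minimal sketch assuming Python 3.9+ and the standard-library `urllib.robotparser`; the `check_urls` helper, the rules, and the URLs are hypothetical. One caveat: `robotparser` applies the first matching rule in a group, while Google applies the most specific (longest) matching rule and also supports `*` and `$` wildcards, which `robotparser` does not. Listing the narrower `Allow` line first keeps both interpretations in agreement for this file.

```python
from urllib.robotparser import RobotFileParser


def check_urls(robots_txt: str, user_agent: str, urls: list[str]) -> dict[str, bool]:
    """Return {url: allowed?} for each URL under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {url: parser.can_fetch(user_agent, url) for url in urls}


# Hypothetical rules: block the admin area but keep admin-ajax.php crawlable.
# The Allow line comes first because robotparser stops at the first match.
rules = """\
User-agent: Googlebot
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/
"""

results = check_urls(rules, "Googlebot", [
    "https://example.com/",
    "https://example.com/wp-admin/",
    "https://example.com/wp-admin/admin-ajax.php",
])
for url, allowed in results.items():
    print("ALLOWED" if allowed else "BLOCKED", url)
```

This prints ALLOWED for the home page and for admin-ajax.php, and BLOCKED for /wp-admin/.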


If the tester reports no errors, or if you want to add a rule, you can make the change and submit it to Google.
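Before submitting a new rule, it can help to see exactly which URLs it would newly block or unblock. Here is a minimal sketch, again assuming Python 3.9+ and `urllib.robotparser`, that compares the current file with an edited version over a sample of URLs. The `changed_urls` helper, both rule sets, and the sample URLs are hypothetical.

```python
from urllib.robotparser import RobotFileParser


def _parse(robots_txt: str) -> RobotFileParser:
    """Build a parser from robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def changed_urls(old_txt: str, new_txt: str, user_agent: str,
                 urls: list[str]) -> list[tuple[str, bool, bool]]:
    """Return (url, allowed_before, allowed_after) for URLs whose status changes."""
    old, new = _parse(old_txt), _parse(new_txt)
    changes = []
    for url in urls:
        before = old.can_fetch(user_agent, url)
        after = new.can_fetch(user_agent, url)
        if before != after:
            changes.append((url, before, after))
    return changes


# Hypothetical current file and an edited version that adds one rule.
current = """\
User-agent: *
Disallow: /wp-admin/
"""
edited = """\
User-agent: *
Disallow: /wp-admin/
Disallow: /private/
"""

# A sample of URLs you care about (hypothetical).
sample = [
    "https://example.com/",
    "https://example.com/private/report.html",
    "https://example.com/wp-admin/",
]

for url, before, after in changed_urls(current, edited, "Googlebot", sample):
    print(f"{url}: {'allowed' if before else 'blocked'} -> {'allowed' if after else 'blocked'}")
```

Here only the `/private/` URL changes status (allowed to blocked); an empty result would mean the edit has no effect on your sample URLs.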

Thanks for reading! (Pardon my grammar; English is not my native tongue.)

If you like my work, please share it on social media! You can follow WP Knol on Facebook, Twitter, Pinterest, and YouTube for the latest updates, or subscribe to the WP Knol newsletter to get them by email. You may also enjoy my recent posts.